This article is about three under-discussed topics in projects that rely on project management applications: the use and misuse of security technology, the use and misuse of statistics software, and the use and misuse of people. These matters can be at the heart of why some projects fail, just as surely as the matters more commonly examined in treatises on the art of project management.

Today, relatively low-cost, trusted security technology is readily available and easy to use. At the same time, powerful statistical tools for analyzing situations under risk, often very effectively, are available to individuals with little or no understanding of the advanced statistics or decision theory on which those tools are based. And, with business globalization, people (distributed project-team members) have become, to a much greater extent, a fungible resource.

Figure 1: Three issues that can contribute to a project’s success or failure.

Security

Enterprise-level project management systems typically include, but are not limited to, the collaborative use of packages such as:

- Microsoft Project Server

- Microsoft Project Professional

- Microsoft SharePoint Portal Server

- Microsoft SQL Server

- Microsoft Exchange

- Microsoft Visual Studio Team Suite (used to develop custom Web Parts for SharePoint)

- Microsoft Team Foundation Server (used for further collaboration among developers)

- Palisade @Risk

Each of these applications has plenty of endogenous security built in, right out of the box. My concern in this article is the exogenous security that you must provide in addition, so that unauthorized individuals don’t gain access to your project management applications and data. Security is a big, open-ended subject. What I’ll do here is make the point that you can’t assume your data is safe just because you have implemented several layers of de rigueur, “by-the-book” security practices.

To that end, I’ll focus on system authentication, authorization, and data encryption using digital certificates (the foundation of Public Key Infrastructure, or PKI). Digital certificates are electronic credentials, issued by a certification authority (CA), that are associated with a public and private key pair. The certification authority certifies that the person who has been issued a digital certificate is indeed who he or she claims to be (see Reference 1 for further details). First, some background on PKI; then, how using it can give you a false sense of security.
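To make the idea of a certificate concrete, here is a minimal sketch of a CA binding a subject’s name to a public key by signing the pair with the CA’s own private key. It uses “textbook” RSA built from two small, well-known primes with no padding, so it is trivially breakable and is not how a real CA or the X.509 certificate format works; the subject name, the placeholder public-key string, and the function names are hypothetical.

```python
import hashlib

# Toy CA key pair from two well-known primes (the 10,000th and 100,000th primes).
# Textbook RSA at this size is trivially breakable: illustration only.
P, Q = 104_729, 1_299_709
N = P * Q
PHI = (P - 1) * (Q - 1)
E = 65_537                    # CA public exponent
D = pow(E, -1, PHI)           # CA private exponent (modular inverse, Python 3.8+)


def _digest(data: bytes) -> int:
    """Hash the data and reduce it below the modulus so it can be signed."""
    return int.from_bytes(hashlib.sha256(data).digest(), "big") % N


def issue_certificate(subject: str, subject_public_key: str):
    """The CA binds a subject name to a public key by signing both with its private key."""
    body = f"{subject}|{subject_public_key}".encode()
    return body, pow(_digest(body), D, N)


def verify_certificate(body: bytes, signature: int) -> bool:
    """Anyone holding the CA's public key (N, E) can check the binding."""
    return pow(signature, E, N) == _digest(body)


body, sig = issue_certificate("alice@corp.example", "alice-public-key-placeholder")
print(verify_certificate(body, sig))                                # True
print(verify_certificate(body.replace(b"alice", b"mallory"), sig))  # a tampered body should fail
```

Changing any byte of the signed body (here, the subject name) makes verification fail, which is what lets a relying party detect an altered certificate. Note that the check proves only that the CA signed the binding; how carefully the CA verified the subject’s identity is a matter of policy, not mathematics.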

Digital certificates are important because today’s password authentication schemes are little more than security placebos. They perversely invite abuse, misuse, and criminal mischief by making users the weakest link in the security chain. You’re far more secure with a layered approach to authentication, one that starts with a digital certificate.

More than any other security protocol or technology available today, PKI can define trust in a granular way. You can use its strengths to protect information about the status of your project from outside competitors, or even from insiders who might misuse information about your work in process. But, because of PKI’s mystique, it is all too often used carelessly, and careless use can lead to unwanted consequences. (See Reference 2 for an account of what to believe and what to disbelieve about what PKI marketers have told you.) Today, a user’s interaction with a PKI-based security system often starts with a smart card.

A smart card is a programmable device containing an integrated circuit that stores your digital certificate. These portable cards serve as positive, non-repudiable proof of your identity during electronic transactions. As such, they allow you to digitally sign documents and e-mail messages, encrypt outgoing e-mail so that only its intended recipients can decipher it, authenticate into a domain (certificate logon), and log in automatically to Internet and intranet Web sites. To use a smart card, a user must be issued a smart card certificate by his or her certification authority. Smart cards traditionally take the form of a credit-card-sized device that is placed into a reader, but they can also be USB-based devices or be integrated into employee badges. And, smart cards can be combined with a PIN (which you can think of as a password) to provide two-factor authentication: physical possession of the smart card and knowledge of the PIN must be combined to authenticate successfully.
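The two-factor principle can be sketched as a challenge-response exchange: the card answers a fresh random challenge with a keyed response, but only after the correct PIN has been entered. This is a simplified, hypothetical model. The class and function names are invented, a shared HMAC key stands in for the private key and certificate that a real smart card would use to produce an asymmetric signature, and the three-attempt lockout is an assumed policy.

```python
import hashlib
import hmac
import secrets


class SmartCard:
    """Hypothetical card: holds a secret key and uses it only after the correct PIN."""

    def __init__(self, key: bytes, pin: str, max_attempts: int = 3):
        self._key = key                                   # in a real card the key never leaves the chip
        self._pin_hash = hashlib.sha256(pin.encode()).digest()
        self._max_attempts = max_attempts
        self._attempts_left = max_attempts

    def respond(self, pin: str, challenge: bytes):
        """Answer a challenge with a keyed MAC, or return None on a wrong PIN or locked card."""
        if self._attempts_left <= 0:
            return None                                   # locked after repeated wrong PINs
        entered = hashlib.sha256(pin.encode()).digest()
        if not hmac.compare_digest(entered, self._pin_hash):
            self._attempts_left -= 1
            return None
        self._attempts_left = self._max_attempts          # reset the counter on success
        return hmac.new(self._key, challenge, hashlib.sha256).digest()


def issue_card(pin: str):
    """Issue a card; the verifier keeps its own copy of the key (symmetric simplification)."""
    key = secrets.token_bytes(32)
    return SmartCard(key, pin), key


def authenticate(card: SmartCard, pin: str, verifier_key: bytes) -> bool:
    """Succeeds only with both factors: the physical card (its key) and the PIN."""
    challenge = secrets.token_bytes(16)                   # fresh nonce, so old responses can't be replayed
    response = card.respond(pin, challenge)
    if response is None:
        return False
    expected = hmac.new(verifier_key, challenge, hashlib.sha256).digest()
    return hmac.compare_digest(response, expected)


card, enrolled_key = issue_card("4821")
print(authenticate(card, "4821", enrolled_key))   # True: card present and PIN correct
print(authenticate(card, "0000", enrolled_key))   # False: card present, wrong PIN
```

Neither factor alone is enough: the right PIN on another card fails, and the right card with the wrong PIN fails. The model says nothing, however, about attacks on the card’s hardware itself.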

So far, so good; but there’s more. The U.S. National Institute of Standards and Technology (NIST) specifically describes smart card chips as “tamper resistant,” one of the many features it lists for them. However, a student at Princeton University, Sudhakar Govindavajhala, heated a smart card with a lamp and exploited it (http://news.com.com/2100-1009_3-1001406.html). The heat corrupted a bit of internal state that controls some security processing, giving access to data that had previously been secured. Smart cards are a great way to add a second method of identity authentication. But, as this story shows, the premise that a smart card is more secure than any other method is not always correct.

Project management files can be protected by the security features of the database in which they are stored. But copies of these files sometimes exist outside the database and should be protected using another function of PKI: encryption. Be forewarned, though: you can’t know for certain that an encrypted file is secure. For one thing, Google Desktop Search can expose the contents of your encrypted files without the password. Google Desktop Search (beta .9) does not actually break the encryption; it simply indexes the information while you are logged in. When you are logged in, you automatically have access to the encrypted files, and so does any program running on the machine under your account. If the resulting index is not itself encrypted, anyone who gets hold of the machine can read what was indexed. So much for counting on encryption to protect your stolen laptop!

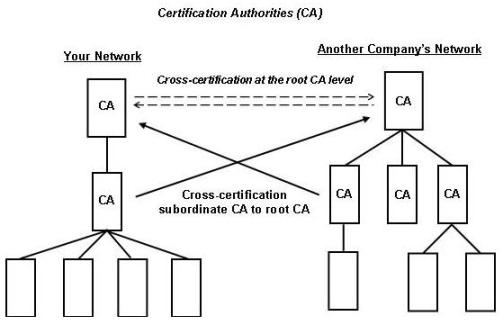

And, people from other companies, or from other branches of your own company with certification authorities of their own, may collaborate with you on your project. The resulting need for interconnectivity inevitably means that users of different PKIs must communicate with each other (see Figure 2). Reciprocal (“cross”) certification allows clients of two separate certification authorities to interact securely. Because certification standards may vary from one PKI to another, the two PKIs should compare policies before establishing cross-certification, to ensure that the appropriate degree of trust exists between them.

Figure 2: Qualified Subordination to Limit Trust to Specific CAs

In some situations, two organizations may want to restrict cross-certification to specific CAs or to specific portions of the CA hierarchy; for example, when an organization uses outside consulting services. If cross-certification were implemented at the root level (as shown by the dashed arrows in Figure 2), the consultants would have complete access to your network. So, cross-certification at subordinate CAs (as shown by the solid arrows in Figure 2) should be used to restrict their access to only those parts of your network they actually require. Lastly, the cross-certification can be qualified, that is, limited to certain uses. This process, called qualified subordination, is effective in managing and limiting trust. See Reference 1 for more on cross-certification. An easy-to-read, non-technical, and very comprehensive account of these and other security issues is available at http://www.netcollege.co.kr/marketing_team/2part/CISSP_Summary_ver_2_0.pdf. Bottom line: relying on endogenous security without understanding the shortcomings of exogenous security can and will give you a false sense of security.
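The difference between root-level and qualified cross-certification can be illustrated with a toy policy check. This is a conceptual sketch only: the CrossCertificate record, the CA names, the domain names, and the usage labels are hypothetical, and real X.509 path validation expresses such limits through name constraints, certificate policies, and application policies rather than through a simple function like this one.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class CrossCertificate:
    """Hypothetical, simplified record of one CA vouching for another CA."""
    issuing_ca: str                          # the CA in your hierarchy that signs the cross-certificate
    partner_ca: str                          # the outside CA being trusted
    permitted_subtrees: tuple = ()           # empty: no namespace restriction
    permitted_uses: frozenset = frozenset()  # empty: no usage restriction


def cross_cert_allows(cc: CrossCertificate, presenter_ca: str, resource: str, usage: str) -> bool:
    """Return True if a certificate issued by presenter_ca may be used for `usage` on `resource`."""
    if presenter_ca != cc.partner_ca:
        return False
    in_scope = not cc.permitted_subtrees or any(
        resource == tree or resource.endswith("." + tree) for tree in cc.permitted_subtrees
    )
    use_ok = not cc.permitted_uses or usage in cc.permitted_uses
    return in_scope and use_ok


# Root-level cross-certification: no qualifications, so trust extends everywhere.
root_level = CrossCertificate("Corp Root CA", "Consultant CA")

# Qualified subordination at a subordinate CA: project resources only, two uses only.
qualified = CrossCertificate(
    "Corp Projects CA",
    "Consultant CA",
    permitted_subtrees=("projects.corp.example",),
    permitted_uses=frozenset({"secure_email", "document_signing"}),
)

print(cross_cert_allows(root_level, "Consultant CA", "payroll.corp.example", "client_auth"))             # True
print(cross_cert_allows(qualified, "Consultant CA", "payroll.corp.example", "client_auth"))              # False
print(cross_cert_allows(qualified, "Consultant CA", "plan.projects.corp.example", "document_signing"))   # True
```

The root-level cross-certificate accepts the consultant’s credentials for anything, anywhere; the qualified one, issued by a subordinate CA, confines them to the project namespace and to the listed uses.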

Statistics

It goes without saying that the correct use of statistics (or any other “science”) can be helpful in project management. And, today, project simulation software with statistical plug-ins that work seamlessly with project data makes large-scale project modeling seem relatively easy. But that ease can be either a blessing or a problem: a blessing when you use this technology to model correctly the statistical nature of the processes that will be at play in your next project, and a problem when you, as so many do, wrongly presume to know the underlying statistical nature of those processes. What you can know with certainty is the statistical nature of past projects. To the extent that your next project is like them, new statistical estimates based on this historical data make a good deal of sense. But, all too often, your upcoming project will have a brand-new distribution of possible outcomes, because the nature of its tasks is different, the human and/or other resources are different, and so forth. Before cautioning you further about some of the pitfalls of statistical tools, I want to say that I am an advocate of using them and, even where their use is not called for, of thinking about project management in statistical terms.

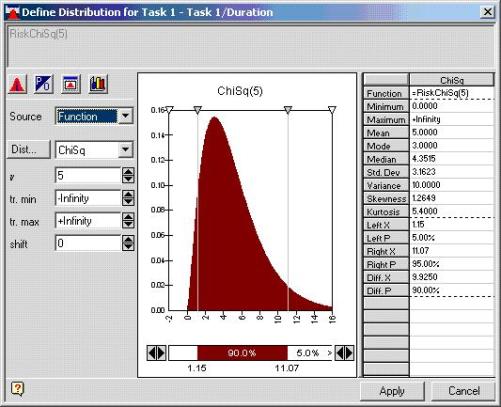

Figure 3: @Risk for Microsoft Project used to simulate the statistical nature of work, cost, and so on.

The best-known project simulation technique is Monte Carlo simulation. But, just as game theory (another advanced mathematical methodology, one used by some of the top poker players) can’t guarantee success at cards, Monte Carlo simulation can’t guarantee that your project will be finished under budget, ahead of schedule, or with the highest possible quality.

In Monte Carlo simulation, for tasks that are hard to predict, you specify the lower and upper limits of an estimate and choose a probability curve (distribution) between those limits (refer to Figure 3). Some tasks are notorious for the wide range between their lower and upper limits; debugging code in a software development project is one example.

The simulation software generates estimates for all the tasks using these parameters. It uses random number generators to produce estimates that comply with the range and distribution curve you’ve chosen for each estimate. Then, the Monte Carlo simulator creates a first version of the entire schedule and calculates the critical path. The software repeats this process many times and finally calculates the average project duration and the probabilities for a range of finish dates. Naturally, Monte Carlo simulation can be applied to other sets of random data, such as the time of arrival of materials or funding. See Reference 3 for further details.
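The steps above can be sketched in a few dozen lines of Python. This is a minimal illustration, not how @Risk works internally: it assumes a triangular distribution for each task (which adds a most-likely value to the lower and upper limits), a small hypothetical task network with finish-to-start dependencies listed in dependency order, and invented durations in days.

```python
import random
import statistics

# Hypothetical task network: name -> (low, most_likely, high, predecessors), in days.
# Tasks are listed so that every predecessor appears before the tasks that depend on it.
TASKS = {
    "design":  (5, 8, 12, []),
    "build":   (10, 15, 25, ["design"]),
    "docs":    (3, 5, 8, ["design"]),
    "debug":   (4, 10, 30, ["build"]),      # wide range, as is typical of debugging
    "release": (1, 2, 3, ["debug", "docs"]),
}


def simulate_once(tasks, rng):
    """Sample a duration for every task and return the project finish time (forward pass)."""
    finish = {}
    for name, (low, mode, high, preds) in tasks.items():
        start = max((finish[p] for p in preds), default=0.0)    # wait for all predecessors
        finish[name] = start + rng.triangular(low, high, mode)  # argument order: low, high, mode
    return max(finish.values())


def monte_carlo(tasks, iterations=10_000, seed=1):
    """Repeat the simulation many times; a fixed seed makes runs reproducible."""
    rng = random.Random(seed)
    return [simulate_once(tasks, rng) for _ in range(iterations)]


durations = monte_carlo(TASKS)
print(f"mean duration: {statistics.mean(durations):.1f} days")
for target in (35, 40, 45, 50):
    share = sum(d <= target for d in durations) / len(durations)
    print(f"P(finish within {target} days) = {share:.0%}")
```

Each call to simulate_once is one version of the schedule, and its finish time is the length of that version’s critical path. Collecting thousands of versions yields the average duration and the probability of meeting each candidate finish date; the output is only as good as the ranges and distributions fed in.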

As already stated, one problem with this process is that it depends on your choosing the correct historical data to predict future events. And nothing in your software can account for learning curves, the time a person needs to become effective when applying a new skill. You can, of course, choose the level of probability you feel comfortable with and derive the project costs, finish date, and so forth, or you can let management choose these probabilities for you. But humans are notorious for coming up with estimates that are self-serving or self-deluding.

These shortcomings notwithstanding, tools such as @Risk for Microsoft Project from Palisade, Inc. can also answer questions like “What is the probability that we will finish on schedule?” using detailed statistics from a simulation. @Risk can also give you a Critical Indices report on the probability that each task will fall on the critical path. Because Microsoft Project alone calculates a single critical path from one set of duration estimates, this report can identify critical tasks that Microsoft Project would miss! Sensitivity and scenario analyses identify the factors and situations that could seriously affect your project. These statistical analyses can help you answer “What are the key factors that could make our project go over budget or past its deadline?”

Finally, a synergy occurs when your simulation software adds logic to statistics. For example, @Risk puts probabilistic branching, if/then conditional modeling, and the like at your fingertips, which, to a great extent, gives you the feeling that you’re “flying on autopilot.” But you’re not. My admonition that nobody can know the probability of future events with certainty still holds. Use these powerful statistical and logical tools, but use them cautiously.
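Probabilistic branching can be sketched the same way: an if/then inside each iteration adds rework and a retest whenever a test fails. In this hypothetical example the failure probability p_fail and all durations are assumptions; the point is how strongly the results depend on a probability that nobody can know with certainty.

```python
import random
import statistics


def simulate_with_rework(p_fail, iterations=10_000, seed=3):
    """Hypothetical build-and-test chain with a probabilistic rework branch (days)."""
    rng = random.Random(seed)
    totals = []
    for _ in range(iterations):
        days = rng.triangular(10, 20, 14)       # build
        days += rng.triangular(2, 5, 3)         # first test
        if rng.random() < p_fail:               # branch: the test fails with probability p_fail
            days += rng.triangular(3, 10, 5)    # then: rework
            days += rng.triangular(2, 5, 3)     #       and retest
        totals.append(days)
    return totals


for p in (0.0, 0.3, 0.6):
    totals = simulate_with_rework(p)
    share = sum(t <= 22 for t in totals) / len(totals)
    print(f"p_fail={p:.1f}: mean {statistics.mean(totals):.1f} days, P(<= 22 days) {share:.0%}")
```

Comparing the three runs shows the schedule risk moving with p_fail, a single guessed number; the simulation makes that assumption visible but cannot make it correct.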

People

Management needs to be able to plan and control the activities of the organization. At the same time, organizations are run by people, be they senior managers, project managers, or team members, who can perform with higher or lower levels of efficiency, creativity, and job satisfaction. In the past, project management focused on “management,” which implied a top-down view of how projects are conducted.

However, studies of weaving mills in India and coal mines in England in the early 1950s found that work groups could handle many production problems better than management could, if they were permitted to make their own decisions on scheduling, allocation of work among members, bonus sharing, and so forth. This was particularly true when variations in the production process required quick reactions by the group, or when the work of one shift overlapped with the work of other shifts. These findings apply, although not always directly, to the people who contribute to large-scale, complex, often-distributed projects such as those typically scheduled with tools like Microsoft Project and Palisade’s @Risk. But these people don’t work in a vacuum.

At one end of the spectrum, in contrast to the “autonomous” work groups described above, are toxic project managers: those who tightly control and manipulate others for their own aggrandizement. They can degrade the quality of work, morale, and even the stability of an organization. Organizations that tolerate or reward toxic behavior are heading for an inevitable fall, because such behavior usually creates tension between labor and management that consumes the energy of both.

Then, in contrast to the effective work-group members described above, there are the solitary problem solvers, found throughout many organizations, who excel at figuring out how to handle “nontrivial” tasks. Forcing these “loners” to work by team rules can neutralize the potential contribution of these valuable members of your organization.

So what does all this talk about “good” team members and “bad” project managers have to do with project management software and systems? Just this: the savings of time (a.k.a. money) made possible by the proper use of today’s powerful project management software and systems can be dwarfed by the economic losses that follow from ignoring the wants, needs, and “psychological” makeup of the people who do the actual work your software attempts to model, and the behavior of the project managers who run these applications.

Conclusion

What’s the bottom line? That is, how can you measure the net effect of how well the people in your organization (all of them) are getting along and working with one another, and of how well you’re using today’s tools and computer technologies to model their work on your projects and keep it confidential? Often, people issues have a bigger impact on your ROI (return on investment) than tools and computer technologies do. For example, just one disgruntled employee can neutralize the protection you thought your investment in PKI technology provided. There are, of course, sophisticated ways to measure how well you’re doing (see Reference 4 for further details). However, you can often get a pretty good idea of how your project management is doing overall through some conspicuously low-tech metrics, such as the rate of employee turnover, whether your customers return with future jobs, and whether your management responds favorably to your next requests.

References

1. Komar, B. (2004). Windows Server 2003 PKI and Certificate Security. Microsoft Press.

2. Ellison, C., and Schneier, B. (2000). “Ten Risks of PKI: What You’re Not Being Told about Public Key Infrastructure.” Computer Security Journal, XVI(1).

3. Cooper, D., Grey, S., Raymond, G., and Walker, P. (2005). Managing Risk in Large Projects and Complex Procurements. John Wiley & Sons.

4. Goodpasture, J. (2004). Quantitative Methods in Project Management. J. Ross Publishing.

5. Peshkova, G., and Kennemer, B. (2005). Microsoft Office Project Server 2003. Sams Publishing.

About the Author

Marcia Gulesian is Chief Technology Officer of Gulesian Associates, a consulting firm that has advised corporations, universities, and governments worldwide. She is author of more than 100 articles on software development, its economics, and its management. You can email Marcia at marcia.gulesian@verizon.net.