Data centers power the global economy. They house the systems, equipment, applications and data that make it possible to do business in the modern world.

Experts estimate that the total number of data centers globally exceeds 8.6 million, with more than 3 million in the U.S. That total has been increasing dramatically over the past two decades thanks to the expansion of the Internet and online business. However, some analysts believe that the number of data centers could begin to decline this year thanks to the rise of the public cloud and co-location. It’s not that the amount of data center space is declining — it’s just the opposite. But workloads are moving into fewer, larger data centers, including those operated by the world’s largest technology companies.

Currently, the largest data center in the world is a 7.2 million-square-foot facility in Nevada known as The Citadel Campus. It uses up to 650 MW of power, all of which comes from renewable energy sources. It is operated by Switch, one of the world’s leading data center providers.

However, most data centers are much smaller. Many organizations operate their own data centers, which vary significantly in size. So what qualifies a facility to be called a “data center”? And what are the typical features of a data center?

What is a Data Center?

In the simplest of terms, a data center is any facility that houses centralized computer and telecommunications systems. It can be as small as a server closet or as large as a standalone building with hundreds of thousands or even millions of square feet of space. Another option is a modular data center, a self-contained integrated unit often housed in a shipping container for portability and scalability.

A typical data center includes a variety of IT infrastructure, including servers to provide computing power, storage (either standalone devices or converged with the servers) and networking gear to connect the systems to the Internet or directly to an organization’s other systems.

A large data center also requires a variety of supporting systems in order to allow the IT infrastructure to function. For example, it needs an adequate power supply to provide enough electricity for all the computers housed in the facility. In general, data centers are connected to the electrical grid, although some have their own on-site power generation. In addition, most data centers have backup power supplies, such as batteries and/or diesel generators, for use in case of an emergency.

Because all those servers generate a lot of heat, cooling is also a significant concern. Servers generally require moderate temperatures in order to function properly, so large data centers may need elaborate liquid- or air-based cooling systems in order to maintain the proper environment. In addition, they often have sophisticated fire suppression technology.

Data centers also need control centers where staff can monitor the performance of the servers as well as the physical plant. They also often have security measures that strictly control access to the facility.

Image Source: Google

History of the Data Center

The first data center was built to house one of the first general-purpose electronic computers, the Electronic Numerical Integrator And Computer, or ENIAC. Built for the U.S. Army Ballistic Research Laboratory and unveiled in 1946, ENIAC was designed to calculate artillery firing tables. The vacuum tube-based machine required 1,800 square feet of floor space and 150 kW of power to provide just 0.05 MIPS of computing power.

In the 1950s and 1960s, computing transitioned from vacuum tubes to transistors, which took up much less space. Still, the mainframe computers of the time were so large that most took up an entire room. Many of the data centers of this era were operated by the government, but companies were also beginning to invest in computers. At the time, the cost for a computer averaged around $5 million, or nearly $40 million in today’s money. Because of the high price tag, some organizations chose to rent computers instead, which cost around $17,000 per month ($131,000 in today’s currency).

In the 1970s, computing took several leaps forward. Intel began selling microprocessors commercially, and Xerox introduced the Alto, a minicomputer with a graphical interface that paved the way toward the dominance of the PC. In addition, Chase Manhattan Bank installed the first commercial local area network. Known as ARCnet and developed by Datapoint, it could connect up to 255 computers. Companies of all types became more interested in new air-cooled computing designs that could be housed in offices, and interest in mainframes housed in separate data centers began to wane.

In the 1980s, PCs took over the computing landscape. While some large technology companies, notably IBM, continued to build data centers to house supercomputers, computing largely moved out of back rooms and onto desks.

But in the 1990s, the pendulum swung the other way again. With the advent of client-server computing models, organizations again began setting up special computer rooms to house some of their computers and networking equipment. The term “data center” came into popular use, and as the Internet grew, some companies began building very large facilities to house all of their computing equipment.

During the dot-com boom of the late 1990s and early 2000s, data center construction took off. Suddenly, every organization needed a website, and that meant they needed Web servers. Hosting companies and co-location facilities began cropping up, and it soon became common for data centers to house thousands of servers. Data center power consumption and cooling became much bigger concerns.

Since then, the trend toward cloud computing has led some organizations to reduce the number of servers at their in-house data centers and consolidate into fewer facilities. At the same time, the major public cloud providers have been constructing extremely large, energy-efficient data centers.

Data Center Design

When deciding where and how to build a new data center, organizations take a number of elements into consideration, including the following:

Location

Because of the pervasiveness of high-speed connectivity, organizations can choose to locate their data centers almost anywhere in the world. In general, they look for locations that will help them achieve fast performance and low cost while meeting their compliance requirements and minimizing risk.

When organizations consider how location may affect performance, the discussion often centers on latency. Although high-speed networks can transmit data anywhere in the world very quickly, it does take longer for data to reach a facility that is far away than one that is close by. For this reason, organizations often build data centers close to their headquarters or office locations and/or close to high-speed transmission lines.
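To get a feel for how distance translates into latency, the sketch below estimates the minimum round-trip time over a fiber link. It is a rough illustration, not a network model: the two-thirds velocity factor for light in fiber is a common rule of thumb, the function name and the sample distances are hypothetical, and real-world latency will be higher because of routing detours, switching and queuing.

```python
# Rough propagation-delay estimate; the constants below are illustrative assumptions.
SPEED_OF_LIGHT_KM_S = 299_792.458  # speed of light in a vacuum
FIBER_VELOCITY_FACTOR = 0.67       # assumed: light in fiber travels at about two-thirds of c


def round_trip_ms(distance_km: float) -> float:
    """Return the minimum round-trip time in milliseconds over a fiber path.

    Only propagation delay is counted, so this is a lower bound.
    """
    if distance_km < 0:
        raise ValueError("distance_km must be non-negative")
    speed_km_s = SPEED_OF_LIGHT_KM_S * FIBER_VELOCITY_FACTOR
    one_way_seconds = distance_km / speed_km_s
    return 2 * one_way_seconds * 1000


if __name__ == "__main__":
    # Hypothetical distances between users and a data center.
    samples = [("same metro area", 50), ("cross-country", 4_000), ("intercontinental", 10_000)]
    for label, km in samples:
        print(f"{label:>17}: {round_trip_ms(km):6.2f} ms minimum round trip")
```

Even under these optimistic assumptions, a facility thousands of kilometers away adds tens of milliseconds to every request, which is why proximity still matters.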

In order to keep their costs low, organizations look for regions that have a favorable tax system and inexpensive real estate. They also look for low-cost power and low temperatures. Colder climates allow organizations to use outside air for cooling, which can have a big effect on overall costs.

Some governments, notably those in Europe, require that data from users in a particular country be stored in the same country. Complying with these laws can sometimes drive data center location decisions as well.

Organizations also consider risk when deciding where to build data centers. For example, areas that are susceptible to frequent hurricanes, earthquakes or tornadoes might not be the best choices for a data center that will be running mission-critical applications. Proximity to an organization’s other data centers might also be a consideration, particularly when it comes to facilities that are used for disaster recovery (DR) purposes. DR sites need to be close enough to the main data center to minimize latency, but far enough away that they are unlikely to be affected by the same events.

Power Supply

For any data center, energy is one of the largest operational expenses. That’s one of the reasons why many enterprises, including Microsoft, Yahoo and Dell, have chosen to build their facilities in Grant County, Washington. This rural part of Washington State boasts average energy costs of just 2.88 cents per kWh, some of the lowest in the nation. In addition, the county is home to two hydroelectric dams, meaning the energy is both plentiful and renewable, which is very important to some enterprises.

Besides chasing low utility costs, organizations also seek ways to make their data centers more energy efficient. As part of that effort, they often measure their power usage effectiveness, or PUE, which is the ratio of the total energy a facility consumes to the energy delivered to its IT equipment. A PUE of 1.0 would mean that all of the power supplied by the energy utility is being used to perform computing work, with none spent on cooling, lighting or other overhead. In general, a PUE under 2.0 is pretty good for a data center, with numbers closer to 1.0 being exceptional. Facebook designed its data center in Prineville, Ore., to have a PUE of 1.07, and Google boasts that its data centers have an average PUE of 1.12.
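The arithmetic behind PUE is simple enough to sketch in a few lines. The example below assumes the standard definition (total facility energy divided by IT equipment energy). The function names and the annual IT load of 1,000,000 kWh are hypothetical values chosen only for illustration.

```python
def pue(total_facility_kwh: float, it_equipment_kwh: float) -> float:
    """Power usage effectiveness: total facility energy divided by IT equipment energy."""
    if it_equipment_kwh <= 0:
        raise ValueError("it_equipment_kwh must be positive")
    if total_facility_kwh < it_equipment_kwh:
        raise ValueError("total facility energy cannot be less than IT energy")
    return total_facility_kwh / it_equipment_kwh


def overhead_kwh(it_equipment_kwh: float, pue_value: float) -> float:
    """Energy spent on cooling, power conversion, lighting and other overhead."""
    if pue_value < 1.0:
        raise ValueError("PUE cannot be below 1.0")
    return it_equipment_kwh * (pue_value - 1.0)


if __name__ == "__main__":
    it_load_kwh = 1_000_000  # hypothetical annual IT energy use
    for value in (2.0, 1.12, 1.07):
        print(f"PUE {value:.2f}: {overhead_kwh(it_load_kwh, value):,.0f} kWh of overhead")
```

Seen this way, moving from a PUE of 2.0 to one near 1.1 eliminates most of the energy that never reaches a server.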

But energy efficiency is only part of the story — organizations also need to make sure that their data centers have an uninterrupted supply of power. To that end, large data centers often have access to multiple sources of power and an uninterruptible power supply (UPS) system that ensures the quality and consistency of the energy coming in.

Many data centers have redundant connections to more than one sector of the power grid. That way, if the electric utility suffers an outage in one part of its grid, the data center may still be able to draw power from another part of the grid.

As an added backup, many large data centers have batteries that can power the facility’s servers and other equipment for a few minutes until backup generators can come online. These enormous generators usually run on diesel fuel and can power the facility until an emergency has passed, although they may not provide enough power to meet the facility’s peak demands.

Cooling

Cooling is a huge contributor to the energy usage at a data center. As anyone who has held a laptop in his lap for a long period of time can attest, computing systems can generate a lot of heat. If you put a whole lot of servers into one room, you’re going to get a whole lot of heat. But most servers aren’t designed to function in very warm environments. In general, they function best in the temperatures where human beings feel most comfortable — between 70 and 80 degrees Fahrenheit. Keeping data centers in that range using traditional air conditioning systems can be very expensive, so organizations are constantly looking for innovative ways to keep their facilities cool.

In some cases, organizations have decided that the easiest option is just to let the data centers get hot. They invest in servers guaranteed to function at temperatures in the 90s or even above 100 degrees Fahrenheit. The obvious downside of this arrangement is for the human staff. Maintaining or replacing equipment in that kind of heat can be miserable. Plus, servers that can operate at higher temperatures may be expensive.

Another approach to the problem involves building the data center in a cold place and leveraging the outside air. Some organizations, including Facebook, have gone so far as to build data centers near the edge of the Arctic Circle. But for logistical reasons, this option isn’t possible for every organization, which is why many turn to other solutions.

In the early days of data centers, many facilities had raised floors that allowed air to flow underneath. Small holes in the flooring allowed cool air, provided by the air conditioning systems, to flow upward and maintain the correct temperature near the servers. While some facilities still use this design, it can be inefficient for large data centers, which often turn to other methods.

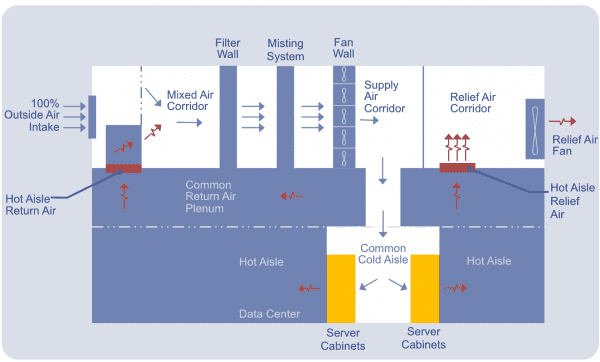

One of the most common approaches today is to use rack and enclosure systems that separate the data center into hot aisles and cold aisles. The heat generated by the servers is directed to the back of the server racks, where it is contained and exhausted to the outside. Cold air from outside the facility or from the air conditioning units feeds the cold aisles, helping maintain the appropriate temperature.

Some data centers use the hot aisle/cold aisle layout in conjunction with liquid-based systems. These solutions circulate water or other fluid near the racks in order to absorb heat and cool the servers.

Image source: The Open Compute Project

Data Center Access

Security is also a major concern for data centers. Knocking a major data center offline could cause significant damage to the U.S. economy, making data centers a terrorist target. More significantly, these facilities house extremely sensitive data that could be of interest to competing organizations, foreign governments or criminals. Data centers are therefore targets, not only for cyberattacks, but also for physical attacks. As a result, most organizations carefully control access. Although Google and Facebook have released images of their data centers, many large cloud computing and co-location providers do not allow anyone in the press to see the inside of their facilities.

For those who are allowed inside, data centers have access control systems, sometimes including biometric identification controls. In general, these systems are also auditable so that organizations can see who has been inside the data center.

Types of Data Centers

Because data centers encompass such a wide range of sizes and capabilities, several different organizations have devised ways for categorizing different types of data centers. Two of the best known of these categorization methods were created by the Telecommunications Industry Association (TIA) and the Uptime Institute.

TIA offers certification that data centers can obtain to demonstrate that their facilities conform to industry-recognized standards. Its ANSI/TIA-942 Certification recognizes four different rating levels. The exact specifications are highly detailed and copyrighted, but the list below offers a general overview:

- Rated-1 is for the most basic data center facilities, essentially non-redundant server rooms.

- Rated-2 is for data centers that have redundant components (i.e., failover servers and storage) and a single distribution path connecting the IT infrastructure.

- Rated-3 is for data centers that have both redundant components and redundant distribution paths and that allow components to be removed and replaced without shutting the data center down (concurrent maintenance).

- Rated-4 is for the most fault tolerant sites, which have redundant components, redundant distribution paths and concurrent maintenance capabilities as well as being able to withstand one fault anywhere in the facility without shutting down.
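The progression in the list above can be read as a series of cumulative checks. The sketch below encodes only that general overview; it is not the ANSI/TIA-942 specification, which is far more detailed. The `Facility` class, its field names and the `approximate_rating` function are all hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Facility:
    """Hypothetical summary of a site's redundancy features."""
    redundant_components: bool
    redundant_paths: bool
    concurrent_maintenance: bool
    fault_tolerant: bool


def approximate_rating(site: Facility) -> int:
    """Return the highest rating level (1-4) whose overview criteria are all met.

    Each level builds on the one before it, so the checks run in order
    and stop at the first missing capability.
    """
    if not site.redundant_components:
        return 1
    if not (site.redundant_paths and site.concurrent_maintenance):
        return 2
    if not site.fault_tolerant:
        return 3
    return 4


if __name__ == "__main__":
    server_room = Facility(False, False, False, False)
    enterprise_site = Facility(True, True, True, False)
    print("Server room:", approximate_rating(server_room))
    print("Enterprise site:", approximate_rating(enterprise_site))
```

Because the levels are cumulative, a site that is fault tolerant but lacks concurrent maintenance would still fall back to the lower rating in this simplified model.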

The Uptime Institute offers a similar certification that classifies data centers into four different tiers that are very similar to TIA’s levels. However, the tier standards also specify uptime targets, as well as redundancy levels for networks and systems. The uptime targets break down as follows:

- Tier 1 — 99.671 percent uptime (28.8 hours of downtime per year)

- Tier 2 — 99.749 percent uptime (22 hours of downtime per year)

- Tier 3 — 99.982 percent uptime (1.6 hours of downtime per year)

- Tier 4 — 99.995 percent uptime (26.3 minutes of downtime per year)
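The downtime figures in the list follow directly from the uptime percentages. The short sketch below reproduces the conversion, assuming a 365-day year (8,760 hours); the function name is hypothetical.

```python
HOURS_PER_YEAR = 365 * 24  # 8,760 hours, ignoring leap years


def annual_downtime_hours(uptime_percent: float) -> float:
    """Convert an uptime target (as a percentage) into allowed downtime in hours per year."""
    if not 0 <= uptime_percent <= 100:
        raise ValueError("uptime_percent must be between 0 and 100")
    return (100 - uptime_percent) / 100 * HOURS_PER_YEAR


if __name__ == "__main__":
    # Uptime targets as listed in the article.
    targets = {"Tier 1": 99.671, "Tier 2": 99.749, "Tier 3": 99.982, "Tier 4": 99.995}
    for tier, percent in targets.items():
        hours = annual_downtime_hours(percent)
        print(f"{tier}: {hours:.1f} hours ({hours * 60:.0f} minutes) per year")
```

The gap between the tiers looks small in percentage terms, but it separates more than a day of downtime per year from less than half an hour.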

Because that degree of redundancy is expensive to build and operate, only a few data centers are able to meet the Rated-4 or Tier 4 standards.

Regardless of their size or capabilities, data centers provide critical capabilities for modern businesses.