Artificial intelligence (AI) is in the midst of an undeniable surge in popularity, and enterprises are becoming particularly interested in a form of AI known as deep learning.

According to Gartner, AI will likely generate $1.2 trillion in business value for enterprises in 2018, 70 percent more than last year. “AI promises to be the most disruptive class of technologies during the next 10 years due to advances in computational power, volume, velocity and variety of data, as well as advances in deep neural networks (DNNs),” said John-David Lovelock, research vice president at Gartner.

Those deep neural networks are used for deep learning, which most enterprises believe will be important for their organizations. A 2018 O’Reilly report titled How Companies Are Putting AI to Work through Deep Learning found that only 28 percent of enterprises surveyed were already using deep learning. However, 92 percent of respondents believed that deep learning would play a role in their future projects, and 54 percent described that role as “large” or “essential.”

But while deep learning seems to have tremendous benefits, this form of artificial intelligence is still very young. Researchers and enterprises need to overcome a number of hurdles if the technology is going to live up to its early promise.

What Is Deep Learning?

To understand what deep learning is, you first need to understand that it is part of the much broader field of artificial intelligence. In a nutshell, artificial intelligence involves teaching computers to think the way that human beings think. That encompasses a wide variety of different applications, like computer vision, natural language processing and machine learning.

Machine learning is the subset of AI that gives computers the ability to get better at a task without being explicitly programmed. Enterprises use machine learning to power fraud detection, recommendation engines, streaming analytics, demand forecasting and many other types of applications. These tools improve over time as they ingest more data and get better at finding correlations and patterns within that data.
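As a minimal sketch of what “without being explicitly programmed” can mean, the Python example below fits a straight line to noisy data using gradient descent. The data set, the hidden rule (y = 3x + 1), the learning rate and the function names are all assumptions made for illustration; production systems use far richer models and data.

```python
import random


def train_linear_model(samples, epochs=1000, learning_rate=0.05):
    """Fit y ≈ w * x + b by gradient descent on mean squared error."""
    w, b = 0.0, 0.0
    n = len(samples)
    for _ in range(epochs):
        # Average gradient of the squared error over all samples.
        grad_w = sum(2 * (w * x + b - y) * x for x, y in samples) / n
        grad_b = sum(2 * (w * x + b - y) for x, y in samples) / n
        w -= learning_rate * grad_w
        b -= learning_rate * grad_b
    return w, b


def mean_squared_error(samples, w, b):
    return sum((w * x + b - y) ** 2 for x, y in samples) / len(samples)


# Hypothetical data: generated from y = 3x + 1 plus a little noise.
rng = random.Random(0)
data = []
for _ in range(50):
    x = rng.uniform(0, 2)
    data.append((x, 3 * x + 1 + rng.gauss(0, 0.1)))

error_before = mean_squared_error(data, 0.0, 0.0)  # untrained model
w, b = train_linear_model(data)
error_after = mean_squared_error(data, w, b)

print(f"learned w={w:.2f}, b={b:.2f}")
print(f"error before training: {error_before:.3f}, after: {error_after:.3f}")
```

Nothing in the code states that the slope is 3 or the intercept is 1. The program recovers values close to them from the examples alone, which is the sense in which the model “learns” the pattern.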

Deep learning is a particular kind of machine learning that became much more popular around 2012 when several computer scientists published papers on the topic. It’s “deep” because it processes data through many different layers. For example, a deep learning system that is being trained for computer vision might first learn to recognize the edges of objects that appear in images. That information gets fed to the next layer, which might learn to recognize corners or other features. It goes through that same process over and over until the system eventually develops the ability to identify objects or even recognize faces.

Most deep learning systems rely on a type of computer architecture called a deep neural network (DNN). These are roughly patterned after biological brains and use interconnected nodes called “neurons” to do their processing work.
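The layered structure can be sketched in a few lines of plain Python. This is only an illustration under stated assumptions: the layer sizes, the random (untrained) weights, the sigmoid activation and the helper names `make_layer` and `forward` are all hypothetical, and a real DNN would learn its weights from data using a framework rather than hand-written loops.

```python
import math
import random


def sigmoid(z):
    """Squash any real number into the range (0, 1)."""
    return 1.0 / (1.0 + math.exp(-z))


def make_layer(n_inputs, n_neurons, rng):
    """Create one layer: each neuron has one weight per input plus a bias."""
    return [
        {
            "weights": [rng.uniform(-1, 1) for _ in range(n_inputs)],
            "bias": rng.uniform(-1, 1),
        }
        for _ in range(n_neurons)
    ]


def forward(network, inputs):
    """Feed inputs through each layer in turn; return every layer's output."""
    activations = [inputs]
    for layer in network:
        previous = activations[-1]
        outputs = [
            sigmoid(
                sum(w * x for w, x in zip(neuron["weights"], previous))
                + neuron["bias"]
            )
            for neuron in layer
        ]
        activations.append(outputs)
    return activations


rng = random.Random(42)
layer_sizes = [4, 5, 3, 2]  # hypothetical: 4 inputs, two hidden layers, 2 outputs
network = [
    make_layer(n_in, n_out, rng)
    for n_in, n_out in zip(layer_sizes, layer_sizes[1:])
]

activations = forward(network, [0.2, 0.7, 0.1, 0.9])
for depth, values in enumerate(activations):
    print(f"layer {depth}: {len(values)} values")
```

Each layer receives only the previous layer's output, which is what lets later layers build on features detected by earlier ones.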

Deep Learning Use Cases

Deep learning is currently being used to power a lot of different kinds of applications. Some of the most common include the following:

- Gaming: Many people first became aware of deep learning in 2015, when the AlphaGo deep learning system became the first AI to defeat a professional human player at the board game Go, a feat it has since repeated multiple times. According to the AlphaGo website, the AI used moves that “were so surprising they overturned hundreds of years of received wisdom, and have since been examined extensively by players of all levels. In the course of winning, AlphaGo somehow taught the world completely new knowledge about perhaps the most studied and contemplated game in history.”

- Image Recognition: As previously mentioned, deep learning is particularly useful for computer vision applications. Microsoft, Google, Facebook, IBM and others have successfully used deep learning to train computers to identify the contents of images and/or to recognize human faces.

- Speech Processing: Deep learning is also good at recognizing human speech, translating text into speech and processing natural language. It can help identify the meaning of words from their context, and it enables chatbots and voice assistants like Siri and Cortana to carry on conversations with users.

- Translation: The next logical step after training a deep learning system to understand one language is to teach it to understand multiple languages and translate among them. Several vendors have done just that and now offer APIs with deep learning-based translation capabilities.

- Recommendation Engines: Users have become accustomed to websites like Amazon and services like Netflix offering them recommendations based on their previous activity. Many of these recommendation engines are powered by deep learning, which allows them to become better at making recommendations over time and enables them to find hidden correlations in preferences that human programmers might miss.

- Text Mining: Text mining is the process of running analytics on text. It might, for example, enable people to determine the feelings and emotions of the person who wrote the text or it might extract the major ideas from a document or even compose a summary.

- Analytics: Big data analytics has become an integral part of doing business for most enterprises. Machine learning, and specifically deep learning, promises to make predictive and prescriptive analytics even better than they already are.

- Forecasting: One of the most common uses for analytics is for forecasting upcoming events. Enterprises are using deep learning to predict customer demand, supply chain problems, future earnings and much more.

- Medicine: Deep learning also has a myriad of potential uses in the medical field. For example, it might be better than human radiologists at reading scans, and it could power diagnostic engines that could augment the capabilities of human physicians.

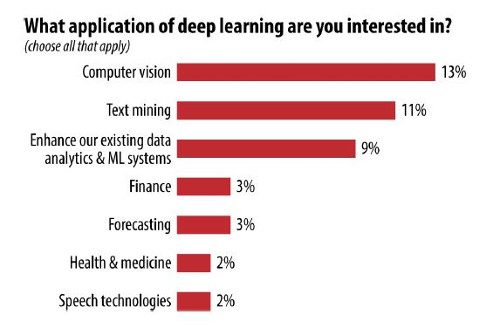

In the O’Reilly survey, respondents said that they were most interested in using deep learning for computer vision, text mining and analytics. Expect the list of potential use cases to grow as researchers find new ways to apply the technology.

Source: O’Reilly “How Companies Are Putting AI to Work Through Deep Learning” Survey Results

Deep Learning Challenges

While deep learning has impressive capabilities, a few obstacles are slowing widespread adoption. They include the following:

- Skills Shortage: When the O’Reilly survey asked people what was holding them back from adopting deep learning, the number one response was a lack of skilled staff. According to the Global AI Talent Report 2018, “There are roughly 22,000 PhD-educated researchers in the entire world who are capable of working in AI research and applications, with only 3,074 candidates currently looking for work.” Enterprises are attempting to fill this gap by training their existing IT staff, but the process is slow.

- Computing Power: DNNs require highly advanced computer infrastructure, usually high-performance computing (HPC) systems with a large number of graphics processing units (GPUs), which are particularly good at the type of calculations necessary for deep learning. In the past, this level of hardware has been too costly for most organizations to afford. However, the growth in cloud-based machine learning services means that organizations can access deep learning-capable systems without the high upfront infrastructure costs.

- Data Challenges: Deep learning can also be hindered by the same sorts of data quality and data governance challenges that hamper other big data projects. Training a deep learning model with bad data introduces the very real possibility of creating a system with inherent bias and incorrect or objectionable outcomes. Data scientists need to take care that the data they use to train their models is as accurate and as unbiased as possible.

- Critics: Some people believe that deep learning is inherently dangerous because it magnifies the inherent biases of the people who create the systems. Others say that while deep learning can solve some problems, the technology has fundamental limitations that will prevent it from being useful for many applications. While these voices are unlikely to halt the adoption of deep learning, they do seem to be slowing the process somewhat.

Open Source Deep Learning Tools

Many of the most common deep learning and artificial intelligence tools are available under an open source license. Some of the most popular include the following:

- TensorFlow: In the O’Reilly survey, 61 percent of respondents said that they were using TensorFlow, and it is easily the most popular deep learning framework available today. It was created by Google and is the basis for many of the deep learning cloud computing services.

- Keras: This was the second most popular deep learning tool in the O’Reilly study. It’s a Python-based neural network API that integrates with TensorFlow, Theano and the Microsoft Cognitive Toolkit.

- PyTorch: Number three in the O’Reilly survey, PyTorch is a Python-based deep neural network framework that incorporates the Torch tensor library. It offers GPU acceleration, flexibility and speed.

- Caffe: Created by Berkeley AI Research (BAIR), Caffe is an open source deep learning framework that boasts expressive architecture, extensible code, speed and a robust community. According to its website, it “can process over 60M images per day with a single NVIDIA K40 GPU.”

- Caffe2: Developed by Facebook, Caffe2 builds on the original Caffe and promises high scalability. It is lightweight and modular, and the website has an extensive collection of pre-trained models to speed application development and deployment.

- MXNet: This Apache Incubating project aims to speed up calculations, especially the calculations performed by DNNs during deep learning. It boasts “high performance, clean code, access to a high-level API, and low-level control.”

- Gluon: This project, originated by AWS and Microsoft, provides an interface for MXNet. It is also expected to be included in future Microsoft Cognitive Toolkit releases.

- Microsoft Cognitive Toolkit: Formerly known as CNTK, this project is “a free, easy-to-use, open-source, commercial-grade toolkit that trains deep learning algorithms to learn like the human brain.” It supports the Python, C++ and BrainScript programming languages, as well as reinforcement learning, generative adversarial networks, and supervised and unsupervised learning.

- H2O: Used by companies like ADP, CapitalOne, Cisco, Progressive, Comcast, PayPal and Macy’s, H2O claims to be the “#1 open-source machine learning platform for enterprises.” It offers highly advanced algorithms and in-memory processing for fast performance with large datasets. The company offers commercial products based on the open source project.

- Theano: This Python library has been used by many deep learning and artificial intelligence applications. It provides tight integration with NumPy, transparent use of GPUs, efficient symbolic differentiation and more.

- DeepDetect: This open source deep learning server is based on Caffe, TensorFlow and DMLC XGBoost. Well-known users include Airbus and Microsoft.

- DSSTNE: Short for Deep Scalable Sparse Tensor Network Engine, DSSTNE (pronounced like destiny) is an AWS library for creating deep learning models. It is designed for multiple GPUs, large layers and optimal performance with sparse data.

- PaddlePaddle: Short for PArallel Distributed Deep Learning, PaddlePaddle powers many Baidu applications. It claims to be easy to use, efficient, flexible and scalable.

- Deeplearning4J: This deep learning library for the Java Virtual Machine claims to be the first project of its kind for Java and Scala. It integrates with Hadoop and Spark and supports GPUs and CPUs. A commercial version is available through Skymind.

- Chainer: Chainer’s website describes it as “powerful, flexible and intuitive,” as well as able to “bridge the gap between algorithms and implementations of deep learning.” It has won several awards.
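To put the Caffe throughput claim quoted in the list above into more familiar units, the short calculation below converts 60 million images per day into images per second and time per image. It is simple arithmetic on the quoted figure, not a benchmark; actual performance depends on the model, the batch size and the hardware.

```python
SECONDS_PER_DAY = 24 * 60 * 60  # 86,400

images_per_day = 60_000_000  # figure quoted from the Caffe website
images_per_second = images_per_day / SECONDS_PER_DAY
milliseconds_per_image = 1000 / images_per_second

print(f"{images_per_second:.0f} images per second")   # 694 images per second
print(f"{milliseconds_per_image:.2f} ms per image")   # 1.44 ms per image
```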

Cloud AI/Deep Learning Services

| Vendor | AI/Deep Learning Tool | Description |
| --- | --- | --- |
| Amazon Web Services | | EC2 instances with deep learning frameworks pre-installed; supports TensorFlow, MXNet, Microsoft Cognitive Toolkit, Caffe, Caffe2, Theano, Torch, PyTorch, Chainer, and Keras |
| | | Fully managed cloud-based TensorFlow service |
| | | Deep learning-enabled video camera for developers |
| Google Cloud | | Managed cloud service that supports multiple machine learning frameworks, including TensorFlow and Keras |
| Microsoft Azure | | Hyper-optimized deep neural networks available as a cloud service |
| IBM | | A deep learning service designed for data scientists |
| Deep Cognition | | Deep learning as a service with a drag-and-drop interface and pre-trained models |